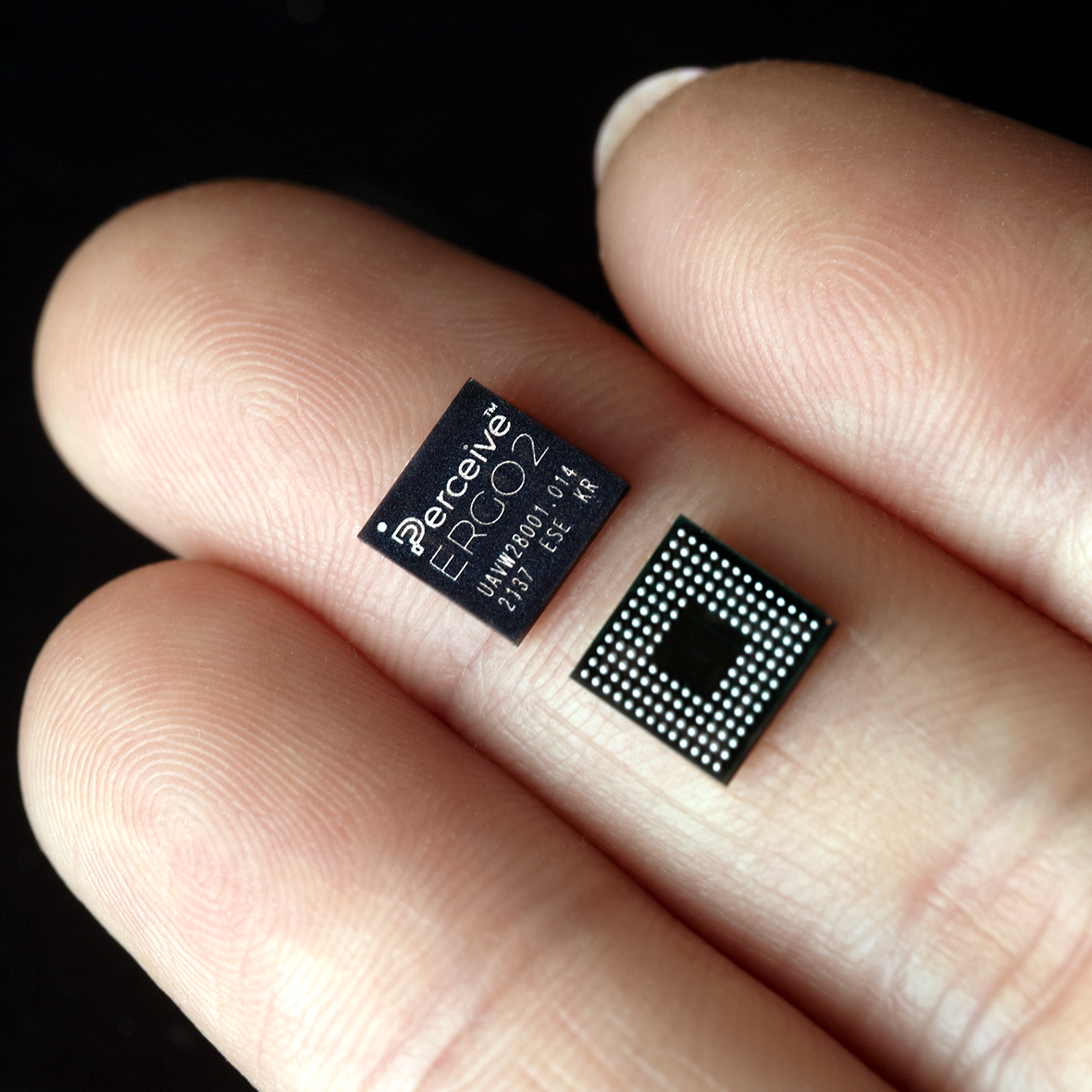

Perceive, the AI chip startup spun out of Xperi, has released a second chip with hardware support for transformers, including large language models (LLMs) at the edge. The company demonstrated sentence completion via RoBERTa, a transformer network with 110 million parameters, on its Ergo 2 chip at CES 2023.

Ergo 2 comes in the same 7 mm × 7 mm package as the original Ergo, but offers roughly 4× the performance. That increase enables edge inference of transformers with more than 100 million parameters, video processing at higher frame rates, or inference of multiple large neural networks at once. For example, YOLOv5-S inference can run at up to 115 inferences per second on Ergo 2, and running it at 30 images per second requires just 75 mW. Power consumption is below 100 mW for typical applications, with a maximum of 200 mW.
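As a rough back-of-the-envelope reading of the YOLOv5-S figure, the quoted power and frame rate can be turned into an energy cost per inference. The sketch below assumes the 75 mW is average power while sustaining 30 inferences per second; the function name is illustrative and not part of any Perceive tool.

```python
def energy_per_inference_mj(power_mw: float, inferences_per_second: float) -> float:
    """Average energy per inference in millijoules.

    Milliwatts divided by inferences per second gives millijoules per inference,
    assuming power_mw is the average draw at that sustained rate.
    """
    return power_mw / inferences_per_second


# Figures quoted in the article: 75 mW at 30 images per second.
yolo_mj = energy_per_inference_mj(75.0, 30.0)
print(f"{yolo_mj:.2f} mJ per YOLOv5-S inference")  # prints "2.50 mJ per YOLOv5-S inference"
```

Under that assumption, each YOLOv5-S inference costs about 2.5 mJ.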

Perceive’s approach to neural network acceleration takes advantage of proprietary model compression techniques combined with a different mathematical representation of neural networks, and hardware acceleration for both.

“The core of our technology is a principled approach to serious compression,” Steve Teig, CEO of Perceive, told EE Times. “That means having a mathematically rigorous strategy for discerning the meaning of the computation and preserving that meaning while representing the neural network in new ways.”

With the compression schemes Perceive is using today, 50-100× compression of models is routinely possible, Teig said.

“We see learning and compression as literally the same thing,” he said. “Both tasks find structure in data and exploit it. The only reason you can compress compressible data is because it’s structured—random data is incompressible…if you can exploit that structure, you can use fewer bits in memory.”
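Teig's point that only structured data compresses can be seen with any general-purpose compressor. The sketch below uses Python's standard `zlib` purely as a stand-in; it is not Perceive's compression scheme, and the test data is made up for illustration.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Original size divided by compressed size (higher means more compressible)."""
    return len(data) / len(zlib.compress(data, level=9))


size = 65536

# Structured data: a short pattern repeated many times.
structured = bytes(i % 16 for i in range(size))

# Random data: no structure for the compressor to exploit.
random_bytes = os.urandom(size)

structured_ratio = compression_ratio(structured)
random_ratio = compression_ratio(random_bytes)

print(f"structured: {structured_ratio:.1f}x, random: {random_ratio:.3f}x")
```

The repeating pattern shrinks dramatically, while the random bytes come out no smaller than they went in, since the compressor's framing overhead slightly outweighs any savings.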

Perceive is using information theory to find that structure, particularly for activations, since it’s activations rather than weights that dominate the memory footprint of most neural networks today. Perceive compresses activations to minimize the memory needed to store them. If it isn’t convenient to compute on compressed activations directly, they can be decompressed when needed, which may be much further down the line depending on the neural network. In the meantime, a bigger portion of the memory is freed up.

Teig said activations can be compressed by a factor of 10 without a corresponding loss of accuracy, compared with the “trivial” 2-4× that quantization might offer. The two techniques are complementary, however.
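To see where a 2-4× figure for quantization comes from, consider storing activations in fewer bits. The sketch below assumes a 32-bit float baseline and simple symmetric 8-bit quantization; both are illustrative assumptions, since the article does not say which precisions Perceive has in mind, and the activation values are invented.

```python
from array import array

# Hypothetical activation values in [0, 1]; real ones would come from a network.
activations = [i / 255 for i in range(256)]

# Assumed baseline: 32-bit floats.
as_float32 = array("f", activations)

# Symmetric int8 quantization: map the largest magnitude to 127.
scale = max(abs(a) for a in activations) / 127
as_int8 = array("b", [round(a / scale) for a in activations])


def size_bytes(buf: array) -> int:
    """Storage used by the array's elements."""
    return len(buf) * buf.itemsize


quantization_ratio = size_bytes(as_float32) / size_bytes(as_int8)

# Dequantize to measure the rounding error that quantization introduces.
max_error = max(abs(q * scale - a) for q, a in zip(as_int8, activations))

print(f"size reduction: {quantization_ratio:.0f}x, worst-case error: {max_error:.4f}")
```

Going from 32 bits to 8 gives 4×, and from 32 to 16 would give 2×, at the cost of a bounded rounding error on every value.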

Other compression techniques Perceive uses include reordering parts of the inference computation in space and time. For inference, all dependencies in the computation are known at compile time, which means the inference can be separated into sub-problems. These sub-problems are then rearranged as necessary.

“This enables us to run much larger models than you’d think, because we have enough horsepower, basically we can trade space for time…having a chip as fast as ours means we can save space by doing some computations sequentially, and have them look parallel,” Teig said in a previous interview with EE Times.
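The general idea of trading space for time can be illustrated with a toy two-stage computation. The sketch below is not Perceive's scheduler; the function names, the toy "layer" and the tile size are all assumptions made for illustration. It processes the input in tiles, one after another, so the intermediate buffer only ever holds one tile rather than the whole layer output.

```python
def toy_layer(x: float) -> float:
    """Stand-in for a layer: a scale-and-shift followed by ReLU."""
    return max(0.0, 2.0 * x - 1.0)


def full_pipeline(xs):
    """Materialize the entire intermediate result, then reduce it."""
    hidden = [toy_layer(x) for x in xs]
    return sum(hidden), len(hidden)  # (result, peak intermediate size)


def tiled_pipeline(xs, tile):
    """Process one tile at a time; only one tile of intermediates is live."""
    total = 0.0
    peak = 0
    for start in range(0, len(xs), tile):
        hidden = [toy_layer(x) for x in xs[start:start + tile]]
        peak = max(peak, len(hidden))
        total += sum(hidden)
    return total, peak


inputs = [i * 0.5 for i in range(1000)]
full_total, full_peak = full_pipeline(inputs)
tiled_total, tiled_peak = tiled_pipeline(inputs, tile=64)

print(full_total, full_peak)    # 498501.0 1000
print(tiled_total, tiled_peak)  # 498501.0 64
```

Both versions produce the same answer, but the tiled one needs a fraction of the intermediate memory, at the cost of running the pieces sequentially. Because the dependencies are known ahead of time, such a split can be planned before the computation runs.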

Transformer compression

For Ergo 2, Perceive figured out a way to compress transformer models and added hardware support for those compressed models.

How much of Perceive’s advantage is down to manipulation of the workload, and how much is down to hardware acceleration?

“It’s both, but the majority is surely software or math,” Teig said. “It’s our mathematical approach to where the compression is to be found that is number one. Number two is the software perspective, and number three is the representation of the neural networks on the chip and hardware to accelerate [that representation].”

Perceive’s compression tool flow has three parts—macro, micro and compile. Macro finds large scale compression opportunities and exploits them, micro looks for further small-scale opportunities using different compression techniques, and the compile stage manages memory and optimizes for power consumption. Ergo 2’s performance relies on all three.

At the SDK level, Perceive’s software stack retrains PyTorch models to make them compatible with Ergo or Ergo 2. There is also a C library used for post-processing tasks on the chip’s CPU, plus a model zoo of about 20 models customers can build on.

Ergo 2 also features architectural changes, including a new unified memory space (the original Ergo had separate memory spaces for the neural network and the on-chip CPU) as well as hardware support for transformers. Teig declined to say how big the new memory space is, but noted that a unified memory space means sub-systems can share the memory more effectively. During the course of an image inference, for example, the entire memory may first be used as a frame buffer. As the neural network digests the image, it can gradually take over the memory as needed, before the CPU uses the same memory for post-processing.

Ergo 2 can also accept higher resolution video—MIPI interfaces have been sped up due to customer demand, increasing the highest acceptable resolution from 4K to 12- or 16-megapixel data on Ergo 2. This has also broadened the chip’s appeal to include laptops, tablets, drones and enterprise applications that demand higher resolution video.

Perceive’s original Ergo will still be available for applications that demand the tightest power budgets, while Ergo 2 will support those that require more performance but have a little more power available.

“A battery powered camera with two years of battery life probably wants to use Ergo, but super-resolution to 4K probably wants Ergo 2,” Teig said.

For comparison, Perceive’s figures put Ergo’s power efficiency at 2727 ResNet-50 images per second per watt, whereas Ergo 2 manages 2465. Both figures are an order of magnitude above competing edge chips.
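Efficiency quoted in images per second per watt is the same thing as images per joule, so inverting it gives the energy per image. The short sketch below does that conversion for Perceive's two ResNet-50 figures; the function name is illustrative.

```python
def millijoules_per_image(images_per_second_per_watt: float) -> float:
    """Invert images/s/W (equivalently images per joule) into mJ per image."""
    return 1000.0 / images_per_second_per_watt


ergo_mj = millijoules_per_image(2727)
ergo2_mj = millijoules_per_image(2465)

# How Ergo 2's efficiency compares with the original Ergo's.
relative_efficiency = 2465 / 2727

print(f"Ergo:   {ergo_mj:.2f} mJ per ResNet-50 image")
print(f"Ergo 2: {ergo2_mj:.2f} mJ per ResNet-50 image")
print(f"Ergo 2 efficiency relative to Ergo: {relative_efficiency:.0%}")
```

On these figures, Ergo spends roughly 0.37 mJ per image and Ergo 2 roughly 0.41 mJ, so Ergo 2 retains about 90% of the original chip's efficiency while delivering higher performance.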

Future transformers

In Teig’s view, bigger is not better—contrary to current trends for bigger and bigger transformers.

“From a mathematical point of view, the information theoretic complexity of the concept you’re trying to capture is the thing that should determine how big your network is,” he said. “We can show mathematically that a language model that captures the richness of the syntax of English, like GPT, should still be measured in millions, not billions and certainly not trillions of parameters.”

Armed with this knowledge, Perceive will continue working on the compression of transformers to make bigger and bigger networks possible at the edge.

“The compression is there to be taken. The only question is whether we, as a community, not just Perceive, are clever enough to figure out how to extract the underlying meaning of the model, and that’s what we’re observing as we’re presenting ever larger [transformer] models to our technology,” he said. “It is finding ways of compressing them far more than previous models, because the complexity of the underlying concept hasn’t grown very much, it’s only the models used to represent them that are growing a lot.”

But what makes transformers so much more compressible than any other type of neural network?

“If the only words you’re willing to use are matrix multiplication and ReLU, think of how many words it would take to say anything interesting,” he said. “If the only words in your language are those, you’re going to have to talk for a long time to describe a complicated concept, and as soon as you step back from the belief that those are the only words you’re allowed to use, you can do a lot better.”

Teig added that while 50-100× compression is no problem today with Ergo 2, he anticipates future compression factors of 1000 to be within reach, and “maybe even 10,000×,” he said.
