

The semiconductor industry is once again at an inflection point, enjoying a renaissance fueled by disruptive artificial intelligence (AI) applications such as autonomous driving and large language models (LLMs). LLMs like OpenAI’s ChatGPT have suddenly become a fixture of everyday conversation, as chatbots appear to have crossed a threshold of conversational capability. The potential for integrating this technology into various services seems limitless.

This level of intelligence requires analyzing and extracting information from data, which in turn demands powerful data storage, transmission and processing capabilities, challenging the computing capabilities of existing data centers and edge devices. Finances Online estimates that data consumption grew from 74 zettabytes in 2021 to 94 zettabytes in 2022 and will reach 118 and 149 zettabytes in 2023 and 2024, respectively (a zettabyte is one sextillion, or 10²¹, bytes). The numbers are staggering, and data center compute power is struggling to keep up.
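The growth rates implied by these estimates can be checked with a little arithmetic. The short Python sketch below takes only the four zettabyte figures quoted above as input (it models nothing about data centers themselves) and computes year-over-year growth and the compound annual growth rate (CAGR). The function and constant names are illustrative, not from any library.

```python
"""Growth arithmetic for the data-consumption estimates quoted in the article."""

BYTES_PER_ZETTABYTE = 10**21  # SI prefix: one zettabyte = one sextillion bytes

# Zettabyte figures as quoted from Finances Online (2023 and 2024 are projections).
DATA_ZB = {2021: 74, 2022: 94, 2023: 118, 2024: 149}


def year_over_year_growth(series: dict[int, float]) -> dict[int, float]:
    """Fractional growth of each year relative to the previous year."""
    years = sorted(series)
    return {
        year: series[year] / series[prev] - 1
        for prev, year in zip(years, years[1:])
    }


def cagr(start: float, end: float, periods: int) -> float:
    """Compound annual growth rate between two values `periods` years apart."""
    return (end / start) ** (1 / periods) - 1


if __name__ == "__main__":
    for year, growth in year_over_year_growth(DATA_ZB).items():
        print(f"{year}: {growth:+.1%}")
    overall = cagr(DATA_ZB[2021], DATA_ZB[2024], periods=2024 - 2021)
    print(f"CAGR 2021-2024: {overall:.1%}")
    print(f"2024 total: {DATA_ZB[2024] * BYTES_PER_ZETTABYTE:.2e} bytes")
```

Under these figures, data consumption roughly doubles over the three years, a compound growth rate of about 26% per year.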

Growing demand for compute power cannot be met by discrete computing systems alone; it requires large-scale distributed computing systems deployed in data centers.

Sure, engineers continually develop more complex models to analyze and use data and, ultimately, improve productivity. However, the traditional way of improving compute power is limited by physical principles, and the traditional path of improving single-chip computing power is not sustainable. Further innovation is fast becoming an imperative in the IT market, and an opportunity for the semiconductor industry.

Performance growth of traditional computing chips has hit bottlenecks. Since the start of the semiconductor industry 60 years ago, advances in computing power have been described by Moore’s Law, the prediction that transistor density doubles roughly every two years (often quoted as every 18 months), and Dennard Scaling, the observation that power density remains constant as transistors shrink and density increases.
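To make the doubling rule concrete, the Python sketch below projects relative transistor density under a simple exponential model, density(t) = d₀ · 2^(t/T), where T is the doubling period. It compares an 18-month period with a two-year period; the model and the function name are illustrative assumptions, and real process nodes do not follow a smooth curve.

```python
"""Exponential projection of transistor density under Moore's Law (simplified model)."""


def projected_density(initial: float, years: float, doubling_period_years: float) -> float:
    """Relative density after `years`, assuming one doubling per `doubling_period_years`."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return initial * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    horizon = 6  # years
    for period in (1.5, 2.0):
        factor = projected_density(1.0, horizon, period)
        print(f"doubling every {period} years -> {factor:.0f}x density after {horizon} years")
```

Over six years, an 18-month doubling period gives a 16x increase versus 8x for a two-year period, so a small difference in the assumed cadence compounds into a large difference in projected capability.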

The combination of the two allowed chips to keep improving computing power while holding energy and area consumption constant. As chip manufacturing moves to 5-nm and 3-nm processes, transistor density is approaching its physical limit, and Moore’s Law is slowing. Dennard Scaling ended around 2004, producing a “power wall”: as transistor density rises, supplying power and dissipating heat become increasingly difficult. Tape-out and design at advanced process nodes are also increasingly costly, creating a “cost wall.” The traditional path of improving single-chip computing power is not sustainable.
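The “power wall” can be seen in the classic Dennard scaling relations. In the simplified textbook model assumed here, shrinking linear dimensions by a factor k scales capacitance C by 1/k, supply voltage V by 1/k and frequency f by k, so dynamic power per transistor, P = C·V²·f, falls by 1/k² while the number of transistors per unit area rises by k². The two effects cancel and power density stays constant. The Python sketch below (function name hypothetical) compares that ideal case with one where voltage stops scaling while the other factors keep scaling, a stylized picture of the post-Dennard era. It ignores leakage power and the fact that clock frequencies also plateaued in practice.

```python
"""Relative power density under ideal vs. broken Dennard scaling (simplified model)."""


def relative_power_density(k: float, voltage_scales: bool = True) -> float:
    """Power density relative to the previous node after a linear shrink by factor k.

    Assumes dynamic power P = C * V**2 * f per transistor and ignores leakage.
    """
    capacitance = 1 / k                          # smaller devices, lower capacitance
    voltage = 1 / k if voltage_scales else 1.0   # post-Dennard: supply voltage stuck
    frequency = k                                # shorter gate delay, faster clock
    power_per_transistor = capacitance * voltage**2 * frequency
    transistors_per_area = k**2                  # density rises with the square of the shrink
    return power_per_transistor * transistors_per_area


if __name__ == "__main__":
    k = 1.4  # roughly one full node: linear dimensions shrink by ~0.7x
    print(f"ideal Dennard scaling: {relative_power_density(k):.2f}x power density")
    print(f"voltage not scaling:   {relative_power_density(k, voltage_scales=False):.2f}x power density")
```

With k = 1.4, the ideal case stays at 1.0x, while a fixed supply voltage nearly doubles power density at each node, which is the heat-dissipation problem behind the power wall.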

Of course, incremental efforts have been made to increase and better utilize computing power. But breaking through current bottlenecks and overcoming the challenges facing data centers requires innovation in the underlying technology.

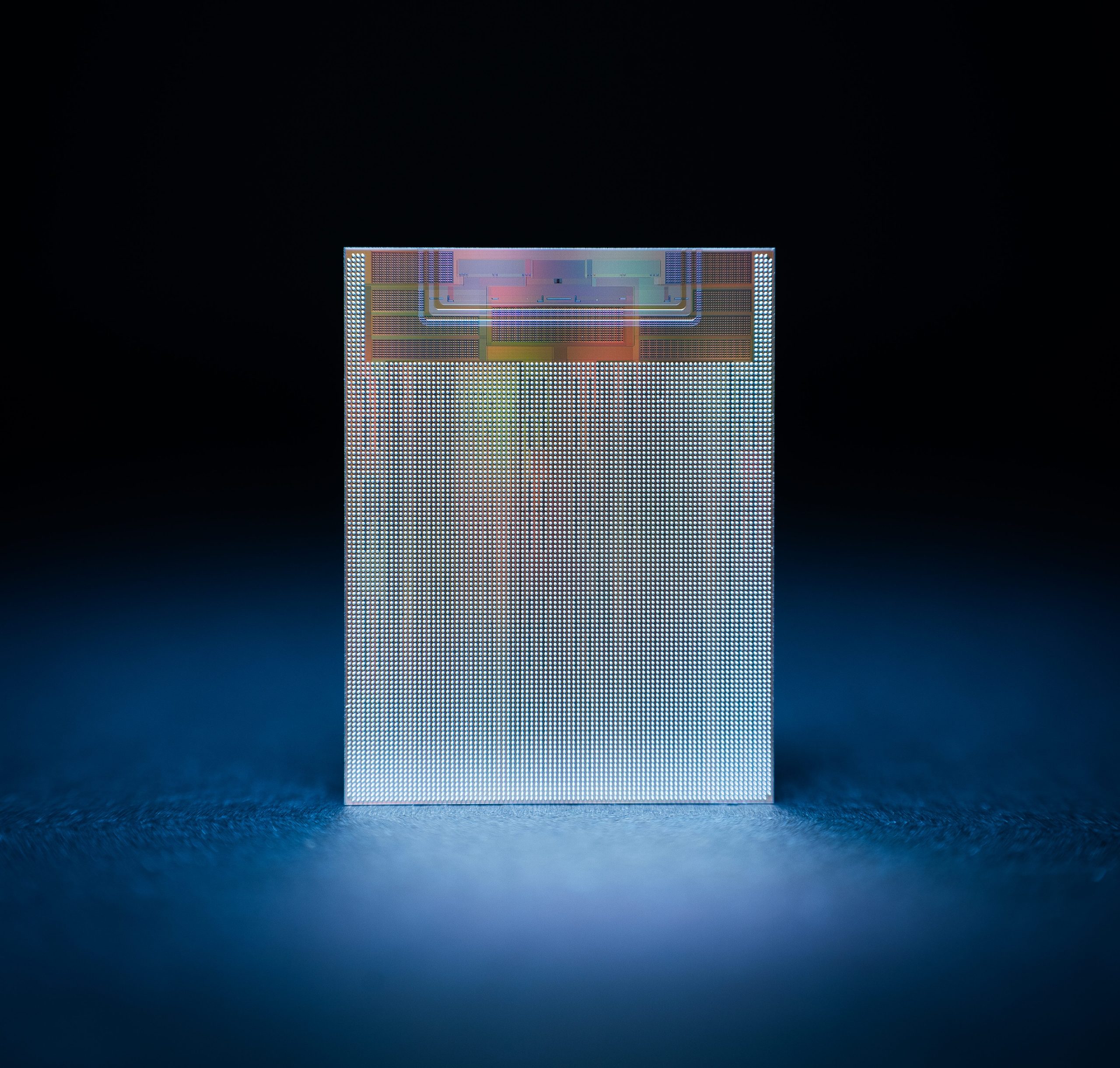

And now comes a novel approach: a data center computing paradigm based on large-scale optoelectronic integration technology, aimed at next-generation data centers. It uses large-scale optoelectronic integration to replace traditional digital circuits, introducing information processing and interconnection capabilities based on integrated silicon photonics.

An optoelectronic hybrid data center integrating silicon photonics technology combines optical computing, on-chip and inter-chip optical networks, and other technologies, vertically increasing single node computing power (scale-up) and horizontally improving the efficiency of large-scale distributed computing (scale-out). One such solution focuses on a new data center computing paradigm based on silicon photonics.

Even if Moore’s Law and Dennard Scaling continued, discrete computing systems could not keep pace with the exponential growth in demand for computing power. Instead, large-scale distributed computing systems must be deployed in data centers, consuming ever more resources as computing demand increases.

Silicon photonics computing provides a path to greater computing power beyond Moore’s Law, as wafer-level on-chip optical networks enable the new paradigm to work effectively with traditional electrical chips and memory chips to improve computing power in a single node. As with all new technologies, the novel computing paradigm will go through a transitional stage in its supply chain, ecosystem and business models.

Innovations are needed at every level, from low-level components to top-level application software. Still, the promise of a new computing paradigm based on silicon photonics is intriguing, and it could help data centers meet their challenges as data volumes grow from zettabytes into yottabytes and beyond.

—Maurice Steinman is VP of engineering at Lightelligence.
