
Memory is one of the fundamental building blocks of a computer. It is a crucial part of almost every device with a built-in processor, whether that is a smartwatch, smartphone, laptop, or desktop computer.

Memory plays a critical role in a computer's overall functioning. It serves as temporary storage for the instructions and data that the computer needs to process, so the processor can access and retrieve information quickly and programs run smoothly.

When a computer runs a program, the instructions and data required by the program are loaded into memory first. The processor fetches these instructions from memory and executes them, accessing data as necessary. Fast and reliable memory is therefore important for overall performance and system speed.

You can gauge the performance of a memory unit, or RAM, by looking at a few basic qualities: capacity, speed, and type. Most of us are already familiar with the common RAM technologies used in laptops, such as DDR4 and DDR5, or in smartphones, such as LPDDR5. A few options, such as unified memory, need a little more introduction.

Unified memory is more of a system than a particular type of memory, allowing your system components to access data from a single memory pool. In this guide, we will talk more about unified memory and try to understand the system and how it functions on a laptop or a computer.

What is Unified Memory?

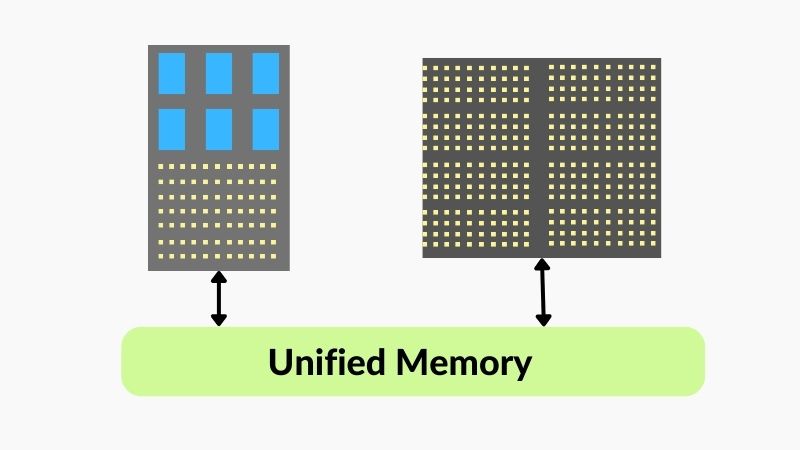

Unified memory, sometimes called shared memory, is a memory architecture that allows different components of a computer system, such as the CPU and GPU, to access a single shared memory pool. In traditional systems, the CPU and GPU have separate memory spaces, so data must be explicitly transferred between them.

The traditional design is well established, but every explicit transfer takes time, which can hold back the CPU or the GPU, especially on newer, faster models. Unified memory, on the other hand, eliminates the need for explicit data transfers by providing a shared memory space accessible to both the CPU and GPU.

As a result, in a system with unified memory, the CPU and GPU can operate on the same data without manually allocating and copying data between their respective memory spaces. This simplifies the programming model and improves overall system performance by reducing data transfer overhead. Unified memory is particularly advantageous in systems requiring significant data exchange between the CPU and GPU, such as graphics-intensive applications, scientific simulations, machine learning, and parallel computing.
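The difference between the two models can be sketched in a few lines of Python. This is only a toy model, not real GPU code: plain `bytearray` buffers stand in for CPU and GPU memory, the "kernel" simply doubles each byte, and the function names are hypothetical.

```python
# Toy model only: plain Python buffers stand in for CPU and GPU memory.

def run_with_separate_memory(host_data):
    """Traditional model: copy to the device, compute, copy back."""
    bytes_copied = 0

    device_data = bytearray(host_data)      # explicit host -> device copy
    bytes_copied += len(device_data)

    for i in range(len(device_data)):       # stand-in "GPU kernel": double each byte
        device_data[i] = (device_data[i] * 2) % 256

    result = bytearray(device_data)         # explicit device -> host copy
    bytes_copied += len(result)
    return result, bytes_copied


def run_with_unified_memory(shared_data):
    """Unified model: both processors work on the same allocation."""
    gpu_view = memoryview(shared_data)      # a second view of the buffer, not a copy
    for i in range(len(gpu_view)):
        gpu_view[i] = (gpu_view[i] * 2) % 256
    return shared_data, 0                   # no explicit copies were issued


separate_result, separate_copied = run_with_separate_memory(bytearray([1, 2, 3, 4]))
unified_result, unified_copied = run_with_unified_memory(bytearray([1, 2, 3, 4]))
```

Both paths produce the same result, but the separate-memory path moves every byte twice, while the unified path issues no explicit copies at all.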

It also allows developers to write code that seamlessly uses both CPU and GPU resources, leading to more efficient utilization of the system’s computational power. Currently, unified memory is usually implemented with the help of technologies such as shared virtual memory (SVM) and memory management units (MMUs).

Basic Working Principle of Unified Memory

Simple as the idea sounds, the inner workings of unified memory are somewhat complicated. To create a single memory pool that holds all of the data, the architecture has to meet certain conditions and carry out a series of steps. Let us look at how unified memory works and which elements matter most to its overall functionality.

1. Memory Allocation

When a program requests memory allocation, the operating system dynamically assigns memory pages within the unified memory space. The CPU and GPU can access these memory pages without copying the data into their respective memory spaces. Allocating the unified memory space is therefore the first step, and everything that follows builds on it.

2. Migration and Page Faults

Initially, the memory pages assigned to unified memory reside in the system’s main memory, or RAM. A page fault occurs when a component accesses a memory page that is not currently resident in the memory that component can reach directly. Page faults are common on both CPUs and GPUs, since these units exchange data continuously, especially when you are playing a game or working with demanding software.

If the CPU generates a page fault, the operating system handles it as it would in a traditional memory system. The missing page is fetched from main memory and made accessible to the CPU, and the fault is resolved transparently to the running program.

When the GPU generates a page fault, the fault is trapped and the GPU notifies the operating system. The operating system then manages the migration of the missing memory page from the CPU’s memory to the GPU’s memory. Managing page faults is therefore crucial in unified memory; without it, accesses to pages held by the other processor would simply fail.
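The fault-and-migrate cycle described above can be sketched as a small simulation. This is a minimal model under simplifying assumptions: each page lives with exactly one processor at a time, and the class and attribute names are hypothetical, not part of any real driver or operating system API.

```python
class UnifiedMemoryModel:
    """Toy model that tracks which processor currently holds each page."""

    def __init__(self, num_pages, initial_owner="cpu"):
        # Pages start out in CPU-side main memory, as in the text above.
        self.page_location = {page: initial_owner for page in range(num_pages)}
        self.fault_count = {"cpu": 0, "gpu": 0}
        self.migrations = 0

    def access(self, processor, page):
        """Access a page; fault and migrate it if it lives elsewhere."""
        if self.page_location[page] != processor:
            self.fault_count[processor] += 1       # a page fault is raised
            self.page_location[page] = processor   # the page is migrated
            self.migrations += 1
        return self.page_location[page]


memory = UnifiedMemoryModel(num_pages=4)
memory.access("gpu", 0)   # GPU touches page 0: fault, migrate CPU -> GPU
memory.access("gpu", 0)   # second access: the page is already local, no fault
memory.access("cpu", 0)   # CPU touches it again: fault, migrate back
memory.access("cpu", 1)   # page 1 never left the CPU: no fault
```

Only the first access from each side triggers a fault, which is why workloads that bounce the same pages back and forth between the CPU and GPU tend to pay the highest migration cost.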

3. Coherency

To ensure data consistency, the system employs mechanisms to maintain coherence between the data held in the CPU and GPU caches. When one processing unit modifies a memory page, the modified data must be made visible to the other processing unit before that unit accesses it. This coherence management prevents the conflicts and inconsistencies that simultaneous memory access could otherwise cause.
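One simple way to picture coherence is a write-through, write-invalidate scheme, sketched below. This particular scheme is an assumption chosen for illustration; real CPUs and GPUs use more elaborate protocols, and the class names here are hypothetical.

```python
class SharedMemory:
    """Backing store shared by every cache in the toy model."""

    def __init__(self):
        self.data = {}
        self.caches = []


class Cache:
    """A per-processor cache that invalidates peers on every write."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}
        memory.caches.append(self)

    def read(self, address):
        if address not in self.lines:               # miss: fetch from shared memory
            self.lines[address] = self.memory.data.get(address, 0)
        return self.lines[address]

    def write(self, address, value):
        self.memory.data[address] = value           # write through to shared memory
        self.lines[address] = value
        for other in self.memory.caches:            # invalidate stale copies elsewhere
            if other is not self:
                other.lines.pop(address, None)


memory = SharedMemory()
cpu_cache, gpu_cache = Cache(memory), Cache(memory)

cpu_cache.write(0x10, 1)
first_gpu_read = gpu_cache.read(0x10)    # GPU caches the current value
cpu_cache.write(0x10, 2)                 # CPU write invalidates the GPU's copy
second_gpu_read = gpu_cache.read(0x10)   # GPU misses and fetches the new value
```

Without the invalidation loop in `write`, the second GPU read would return the stale cached value, which is exactly the kind of inconsistency coherence management exists to prevent.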

4. Data Transfer

Although unified memory eliminates the need for explicit data transfers between the CPU and GPU, on systems where the two have physically separate memory, data still moves between them behind the scenes. The operating system handles these transfers transparently, based on the access patterns and demands of the game or program you are running.

It’s worth noting that while this is the basic working principle of a unified memory system, the exact implementation and behavior can vary depending on the specific hardware, the software framework, and the features the operating system provides. Developers working with unified memory should refer to the documentation and guidelines specific to their system to ensure optimal utilization and performance.

Functioning of Unified Memory on Mac

Unified memory is one of the headline features of recent Mac computers, and it is a large part of how their memory behavior differs from that of a standard desktop system. The specific implementation of unified memory on Mac systems is known as “Apple Unified Memory Architecture” (AUM).

Apple’s Metal framework, a low-level, high-performance graphics and computing API for macOS and iOS, provides the foundation for using unified memory on Mac systems. Developers allocate memory through the Metal API by creating buffers and textures, and these allocations can be designated as “shared” or “managed” memory.

Apple’s AUM also ensures memory coherence and synchronization between the CPU and GPU. When one processing unit modifies a shared memory location, the updated data becomes visible to the other processing unit once that work is synchronized, thus ensuring data consistency.

The best part of unified memory on Mac systems is that you rarely have to move data by hand. On Macs with Apple silicon, the CPU and GPU share the same physical memory, so a shared resource does not need to be copied between separate CPU and GPU memories at all. On Macs with a discrete GPU, the managed storage mode keeps a CPU copy and a GPU copy of a resource, and the data is synchronized between them when the application indicates that one side has changed.

Is Unified Memory Fast?

The speed of unified memory depends on several factors, including the specific hardware architecture, the memory technology, and your overall system configuration. Unified memory does not inherently provide a performance advantage over a traditional RAM architecture. Its performance is shaped by memory bandwidth, latency, cache hierarchy, and the efficiency of the memory management techniques in use. While unified memory simplifies programming and data sharing between the CPU and GPU, the performance implications also vary with the workload and its memory access patterns.

The speed of unified memory is limited by the memory bandwidth available in the system. If the memory bandwidth is bottlenecked, the performance of unified memory will be impacted. The latency of accessing data in unified memory is also influenced by the memory technology used and the distance between the processing units (CPU and GPU) and the memory.
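As a rough illustration of how bandwidth bounds performance, the sketch below estimates the minimum time needed to move one uncompressed 4K frame through memory. The two bandwidth figures are hypothetical round numbers, not measurements of any particular system, and the estimate ignores latency and contention.

```python
def transfer_time_ms(num_bytes, bandwidth_gb_per_s):
    """Lower bound on the time to move num_bytes at a given bandwidth."""
    bytes_per_second = bandwidth_gb_per_s * 1e9
    return num_bytes / bytes_per_second * 1e3


# One 3840 x 2160 frame with 4 bytes per pixel (RGBA).
frame_bytes = 3840 * 2160 * 4

# Hypothetical bandwidths, chosen only to show the scaling.
slower_ms = transfer_time_ms(frame_bytes, 50)
faster_ms = transfer_time_ms(frame_bytes, 100)

print(f"{frame_bytes} bytes: {slower_ms:.3f} ms at 50 GB/s, {faster_ms:.3f} ms at 100 GB/s")
```

Doubling the available bandwidth halves this lower bound, which is why a bandwidth bottleneck shows up directly in workloads that stream large buffers between the CPU and GPU.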

The presence of CPU and GPU caches also affects the effective speed and bandwidth of unified memory access. If the required data is already in a cache, the access latency is lower than when the data has to be fetched from unified memory.

What are the Differences Between Unified Memory and RAM?

While both terms describe the memory your computer works with, RAM and unified memory are not the same thing.

RAM, also known as main memory or primary memory, is a hardware component in a computer system that provides temporary storage for data and instructions that the CPU actively uses during program execution. It is directly accessible by the CPU and is typically faster than secondary storage devices like hard drives or solid-state drives (SSDs). The CPU uses RAM to load and execute programs, store intermediate results, and hold data being actively processed.

Unified memory, on the other hand, is an architecture that allows your computer’s CPU, GPU, and other components to access the same memory pool. Unlike traditional memory systems, where the CPU and GPU need separate memory spaces, unified memory simplifies programming by eliminating the need for explicit data transfers. It provides a single view of memory, enabling seamless data sharing and cooperation between the processing units.

Unified Memory Capacity

Your memory requirements depend on your usage and the type of applications you plan to run on the system. Suppose your work revolves around casual web browsing, using web apps, and industry-standard productivity software. In that case, you can easily get by with an 8 GB unified memory space without much trouble.

Keep in mind, though, that Macs with a small amount of unified memory rely heavily on swap memory, so 8 GB may not be the ideal choice in the long run, as heavy swapping can shorten the lifespan of your internal SSD. The more memory capacity you have, the less your device will have to rely on the storage drive.

Having 16 GB of unified memory is becoming the standard nowadays, since most people end up running heavier programs in their day-to-day work that benefit from that capacity.

While 16 GB is still not a lot of capacity, it can suffice for light and heavy users alike. Just remember that you won’t be able to upgrade the unified memory on your system after purchase, so capacity should be a crucial point to consider when buying.

Unified Memory – FAQs

Ans: In general, it is better to go with multiple RAM sticks rather than a single stick with a higher capacity, for a couple of reasons. First, multiple RAM sticks allow you to take advantage of the multi-channel configuration supported by your CPU and motherboard. Using multiple memory channels, such as dual-channel or quad-channel, can provide higher memory bandwidth and better performance than a single-channel configuration. Second, having multiple sticks allows your system to keep running on the remaining sticks if one of them malfunctions.
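The multi-channel benefit can be worked out with simple arithmetic. The sketch below computes theoretical peak bandwidth, assuming DDR4-3200 modules (3200 million transfers per second) on a 64-bit channel; the function name is hypothetical, and real-world throughput is lower than this peak.

```python
def peak_bandwidth_gb_per_s(transfers_per_second, bus_width_bits, channels):
    """Theoretical peak: transfers/s x bytes per transfer x number of channels."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer * channels / 1e9


# Assumed example: DDR4-3200 on a 64-bit channel.
single_channel = peak_bandwidth_gb_per_s(3200e6, 64, channels=1)
dual_channel = peak_bandwidth_gb_per_s(3200e6, 64, channels=2)

print(f"single-channel: {single_channel:.1f} GB/s, dual-channel: {dual_channel:.1f} GB/s")
```

Under these assumptions, a single stick peaks at about 25.6 GB/s, while two sticks in dual-channel mode double that to about 51.2 GB/s.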

Ans: On Apple devices, unified memory refers to a memory architecture and programming model that allows for seamless memory sharing between different processing units. It is available on various Apple devices, including Macs with Apple silicon (M1 or later SoCs) and iOS devices. Because the CPU and GPU share the same memory pool, data does not have to be copied between separate CPU and GPU memory spaces, which helps performance and minimizes data transfer latency.

Ans: Before you decide which unified memory capacity is better for you, consider how you use your system and the type of applications you need to run. If you primarily use your computer for web browsing, word processing, and light multitasking, 8GB of RAM is generally sufficient. Operating systems and software tend to become more resource-intensive over time, so a larger capacity, like 16GB, can help ensure your computer handles future software updates and newer applications without performance bottlenecks.

Conclusion

Unified memory is one of the more notable advancements in memory technology in recent years. By removing much of the data copying between the CPU and GPU, it can make a system feel faster and more responsive, although the actual gain depends on the workload. Whether you should go with a traditional RAM setup or a Mac with unified memory depends entirely on your needs. In our opinion, the newer approach is worth trying so you can judge the difference for yourself. If your needs are modest, 8 GB of unified memory instead of 16 GB is the more affordable choice.
